Multi-Armed Bandits

What is a multi-armed bandit experiment?

A multi-armed bandit (MAB) approach combines exploration and exploitation in sequential experimental designs. In traditional experiments, researchers explore by testing all alternatives equally, evaluating outcomes only after the experiment has ended. In contrast, MAB experiments continuously learn from the data that arrives during the experiment and immediately exploit this information to improve allocation decisions. This means that such experiments not only generate knowledge but also earn on that knowledge while still learning.

The term multi-armed bandit originates from the metaphor of a gambler facing several slot machines (“one-armed bandits”) with unknown payout probabilities. The gambler must decide whether to keep playing the supposedly most rewarding machine (exploitation) or try others to learn about their reward potential (exploration). In experimental research, this trade-off mirrors the idea of allocating more observations to better-performing treatments while still learning about the rest.

Why are MAB experiments useful?

MAB experiments are particularly useful in settings with strong output orientation. This is clearly the case in medical research, where they allow patients to benefit from emerging evidence on effective treatments while the study is still ongoing. While it is usually not a matter of life and death, similar logic applies to field experiments with firms. Businesses often evaluate experiments by the additional output they generate rather than by their statistical power, which is what we as researchers typically care about. By balancing these two objectives, MAB experiments could help encourage more firms to engage in field experiments in the future.

Putting the MAB approach into practice

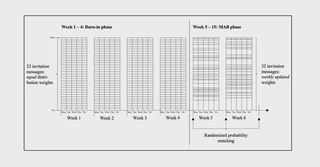

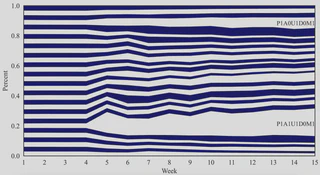

Together with Johannes Gaul, Florian Keusch, and Davud Rostam-Afschar, I applied a multi-armed bandit approach in a large-scale business survey experiment. We aimed to identify how to phrase an invitation message targeted at business decision-makers so that their likelihood of starting the survey would be maximized, which is particularly relevant given the typically low response rates in business surveys. Over a 15-week period, 176,000 firms opened one of 32 invitation message versions. Instead of implementing a fixed and balanced randomization scheme, we continuously updated the probability of sending each message version based on its observed starting rate, using a Bayesian decision rule known as randomized probability matching. This adaptive approach increased the number of survey starts by 6.66% compared to a traditional static experimental setup.

Click to view how the randomization scheme evolved throughout the experiment

Figure: Evolution of the distribution weights over the 15-week experimental period. Each interval represents the cumulative distribution share of one message version.

Learn more

For details, use the following resources (and feel free to reach out!):

- Our paper, forthcoming in Survey Research Methods: Invitation Messages for Business Surveys: A Multi-Armed Bandit Experiment.

- The replication package, which includes code to implement randomized probability matching and to reproduce all results.

- The original introduction of randomized probability matching: Scott, S. L. (2010). A modern Bayesian look at the multi-armed bandit. Applied Stochastic Models in Business and Industry, 26(6), 639–658.